Following my previous post on the various meanings of a customer concurrency requirement, I will try to explain why database (SQL) concurrency is usually the wrong target to set.

My main point is that database SQL concurrency is the result of both the SQL workload’s throughput (like “queries per hour”) and the database-specific latency (SQL response time). For example, I’ll demonstrate how, for a fixed workload, making a query go faster (tuning it) automatically reduces the database concurrency. This is a generic point, it is not specific to a database technology, and applies beyond the database domain.

Here is a simple example. Let us assume that a database is required to support 1800 similar queries per hour, arriving randomly. That means on average one new query every two seconds. Let us also assume that for now, each query runs on average 60 seconds, regardless of the database load (just for simplicity sake). So, given those specific query throughput and latency, the database will have about 30 concurrent SQLs running on average.

Continuing the example, let’s assume we now somehow tune the database to make these type of SQL faster and now each query execution takes only 10 seconds. If the workload is still 1800 SQLs per hour, suddenly we will only have about five concurrent SQLs! If we further tune the SQLs to execute in half a second, we will see less than one concurrent SQL – as the rate of which SQLs are submitted is much lower than each SQL run time.

What this thought exercise nicely demonstrate is that SQL concurrency is a derived metric. For a given workload requirement, which is typically a mix of different query types, each with its own throughput requirement, SQL concurrency is a result of the queries response time. So, I believe that SQL concurrency is actually a bad metric to compare systems – it is derived from the existing implementation, not from the user requirements.

To elaborate on that, let’s assume an existing system like in the example – having 30 concurrent SQLs, each running for 60 seconds (or 1800 per hour). We want a new system that supports 4x more work. Let’s say we can live with having 60s response time (faster would be a nice bonus, not a requirement). We can ask for:

- A system that supports 120 (30 x 4) concurrent sessions of these SQLs.

- A system that supports 7200 (1800 x 4) queries per hour.

When evaluating different technologies, the difference between the two can be profound. For example, let’s assume that one database technology actually runs our query in half a second. Following the first requirement will lead to sizing a system that supports 864000 queries per hour, while following the second requirement will lead to sizing a system that supports 7200 queries per hour…. That is more than two order of magnitude difference and likely a big cost difference, just due to “bad wording”.

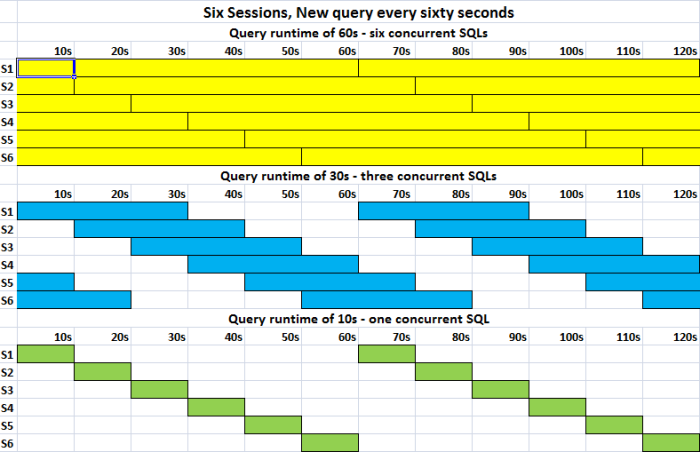

Here is a simplified visual example, this time with six concurrent sessions, each with one query per minute. It is quite easy to see that as the query response time gets smaller, so does the concurrency:

As an argument for testing high concurrency scenario, sometimes customers want to use it as a way to “push the tested systems to the limit”. In that case, I believe it is best to just increase the workload in a series of steps (+50%, 3x, 10x) and see what happens. Don’t worry, the concurrency will automatically grow as well…

To sum it up, don’t waste too much time hypothesizing about concurrency when testing databases. IT is very hard to get it right, and the numbers are not comparable across different systems. Just define your required throughput and response time and work to get there…

High concurrency does have specific negative side effects on databases, so I might revisit it in a future post 🙂

3 thoughts on “It’s not about concurrency, it’s about throughput and latency”